SEO Optimization: Key Practices for Web Developers

SEO (Search Engine Optimization) is the practice of improving a website’s technical structure, content quality, and user experience so that it meets search engines’ ranking criteria and ranks higher in organic search results. Good SEO brings targeted exposure and a steady flow of traffic, which translates into real benefits for a product. So which SEO points should we, as web developers, pay special attention to in our daily work? Let’s go through the following key areas:

TDK Optimization

Traditionally, TDK consists of Title (page title), Meta Description (page description), and Meta Keywords. However, Google does not use the keywords meta tag for web page ranking, so we only need to consider the first two.

Title

<title itemprop="name">Mastering Deep Copy in JavaScript: From Basics to an Industrial-Grade Solution | cris-web</title>

Google truncates titles by pixel width rather than by character count, which in practice means it typically displays the first 50-60 characters of the title tag. The limit depends on the width of the characters and the space available on the search engine results page (SERP), and titles that exceed it may be cut off with an ellipsis. There is no hard limit on the length of a title tag, but given what the SERP can display, 50-70 characters is a common recommendation.
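The length guidance above can be turned into a rough pre-publish check. This is a minimal sketch: `check_title` and its `max_chars` budget of 60 are illustrative assumptions, and because real truncation depends on pixel width, the result is only a warning, not a prediction of what the SERP will show.

```python
def check_title(title: str, max_chars: int = 60) -> dict:
    """Report whether a <title> is likely to fit in a search result.

    max_chars is an assumed character budget; real truncation is based on
    pixel width, so treat the result as a rough warning only.
    """
    text = " ".join(title.split())  # collapse runs of whitespace
    return {
        "length": len(text),
        "likely_truncated": len(text) > max_chars,
    }


report = check_title(
    "Mastering Deep Copy in JavaScript: From Basics to an "
    "Industrial-Grade Solution | cris-web"
)
print(report)
```

A check like this fits naturally into a build step or a content linter, where a long title produces a warning rather than a failure.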

Meta

The <meta> element matters for SEO in several ways: it supplies the page description, controls how shared links are presented, and tells crawlers how to treat the page.

Description

The description is set with a <meta> element whose name attribute is "description", and it helps search engines better understand the content of the web page. The description does not affect ranking, but because it is often shown as the snippet under the result, it does affect the click-through rate.

<meta name="description" content="Learn the key differences between default, async, and defer script loading methods in web development. Optimize your website's speed and user experience by selecting the best script loading strategy.">

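On title length, the traditional view is that title tags should be kept within 55-60 characters, although some SEO practitioners now consider that limit outdated and accept titles of up to 70 characters. Google likewise documents no fixed length limit for meta descriptions; snippets are truncated as needed to fit the device width.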
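To check descriptions automatically, a page's HTML can be scanned with Python's standard `html.parser`. The sketch below is an illustration only: `DescriptionParser`, `audit_description`, and the 160-character budget are assumptions, not limits stated by any search engine.

```python
from html.parser import HTMLParser


class DescriptionParser(HTMLParser):
    """Capture the content of <meta name="description"> if present."""

    def __init__(self) -> None:
        super().__init__()
        self.description = None  # stays None when the tag is absent

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.description = attr.get("content") or ""


def audit_description(html: str, max_chars: int = 160) -> list[str]:
    """Return human-readable warnings about the page's meta description."""
    parser = DescriptionParser()
    parser.feed(html)
    parser.close()
    if parser.description is None:
        return ["missing meta description"]
    if not parser.description.strip():
        return ["empty meta description"]
    if len(parser.description) > max_chars:
        size = len(parser.description)
        return [f"description is {size} chars, may be truncated"]
    return []
```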

Open Graph Protocol

The Open Graph protocol is implemented through og: meta tags, which declare a page’s type and key elements so that content shared to social networks is parsed correctly, and which control what appears on the share card. Although these tags do not affect search rankings, they can increase click-through rates.

<meta property="og:url" content="https://blog.crisweb.com/posts/async-vs-defer-vs-default-choosing-the-right-script-loading-method/">

<meta property="og:site_name" content="cris-web">

<meta property="og:title" content="Async vs Defer vs Default: Choosing the Right Script Loading Method">

<meta property="og:description" content="Learn the key differences between default, async, and defer script loading methods in web development. Optimize your website's speed and user experience by selecting the best script loading strategy.">

<meta property="og:locale" content="en_US">

<meta property="og:type" content="article">
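When pages are generated from templates, the tags above are usually produced from page data rather than written by hand. The following sketch shows one way to do that; `render_open_graph` is a hypothetical helper, and the only real API it relies on is `html.escape` from the standard library.

```python
from html import escape


def render_open_graph(properties: dict[str, str]) -> str:
    """Render one <meta property="og:..."> tag per entry.

    Values are HTML-escaped so that quotes or ampersands in a title
    cannot break out of the content attribute.
    """
    lines = []
    for name, value in properties.items():
        lines.append(
            f'<meta property="og:{escape(name)}" content="{escape(value)}">'
        )
    return "\n".join(lines)


print(
    render_open_graph(
        {
            "site_name": "cris-web",
            "type": "article",
            "locale": "en_US",
        }
    )
)
```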

Robots Strategy Definition

<meta name="robots" content="index, follow">

The content attribute accepts many values, including:

- index: Allow the page to be indexed.

- noindex: Do not show the page in search results.

- follow: Allow crawlers to follow the links on the page.

- nofollow: Do not follow the links on the page.

- noarchive: Prevent a cached copy of the page from being offered in search results.

- nosnippet: Prevent a text snippet (description) from being shown for the page in search results.

- all: Equivalent to index and follow.

- none: Do not show the page in search results and do not follow the links on it. Equivalent to setting noindex and nofollow together.
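The way these values combine can be sketched as a small resolver. `parse_robots_meta` is a hypothetical helper, and two behaviors in it are assumptions for illustration: absent directives default to "allowed", and a restrictive value wins when directives conflict.

```python
def parse_robots_meta(content: str) -> dict[str, bool]:
    """Resolve a robots meta content string into effective permissions.

    Assumptions: anything not mentioned is allowed, and a restrictive
    directive (noindex, nofollow, none) overrides a permissive one.
    """
    directives = {
        part.strip().lower() for part in content.split(",") if part.strip()
    }
    noindex = bool(directives & {"noindex", "none"})
    nofollow = bool(directives & {"nofollow", "none"})
    return {
        "index": not noindex,
        "follow": not nofollow,
        "archive": "noarchive" not in directives,
        "snippet": "nosnippet" not in directives,
    }


print(parse_robots_meta("index, follow"))
print(parse_robots_meta("none"))
```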

Website Quality

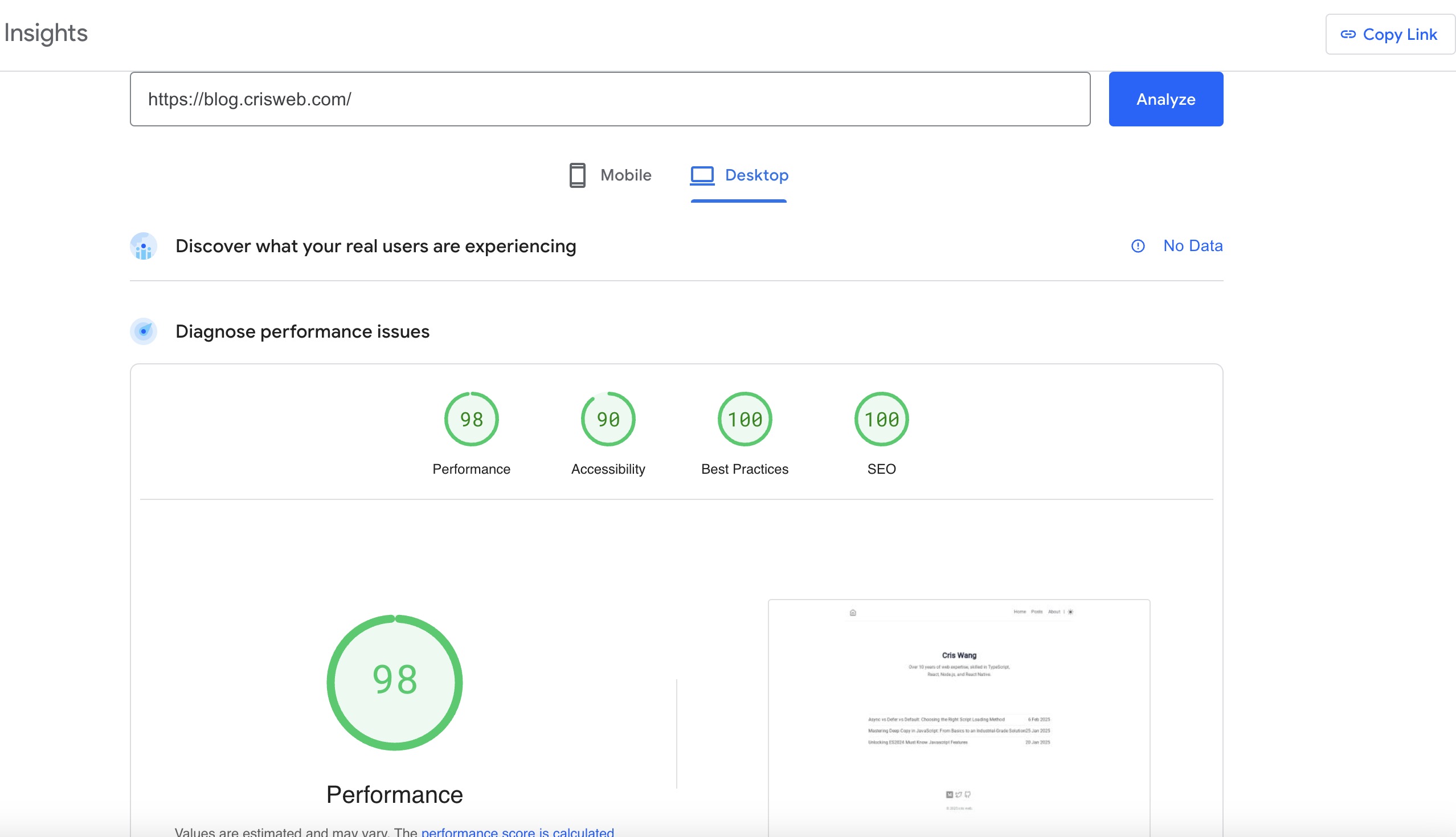

Ensure Page Performance

Slow pages drive users away: when a site loads slowly, more visitors bounce, spend less time on the site, and interact with it less. Search engines are widely believed to read these as signs of a poor user experience, which hurts rankings. A website that Google considers high quality should therefore also have excellent page performance.

In practice, test every website with a tool such as Lighthouse before launch, and only ship it once it reaches an agreed score.

Although Google can index content rendered dynamically with JavaScript, the rendering is not always perfect, especially for complex single-page applications (SPAs), and this can lead to incomplete indexing. Developers are advised to check that key content renders in Search Console’s “URL Inspection” tool to confirm it is crawlable. In addition, slow initial (first-screen) loading, whether in an SPA or an MPA, can still affect SEO.

Semantic HTML

Building pages with semantic HTML shows attention to content structure and user experience, and it makes the page easier for search engines to interpret. Search engines generally prefer well-structured, high-quality pages, so semantic HTML helps the site’s overall ranking and credibility in search results. Here are some common semantic HTML tags:

Document Structure Tags

- <header>: Represents the header of a page or content area, usually containing titles, navigation links, and other introductory information.

- <nav>: Defines a navigation area, typically containing one or more lists of links that guide users through the website.

- <main>: Contains the main content of the page, its most important content area.

- <footer>: Represents the footer of a page or content area.

Content Grouping Tags

- <section>: Represents a section or content grouping within a document.

- <article>: Represents an independent, complete content unit.

- <aside>: Marks auxiliary content.

Text Semantic Tags

- <figure> and <figcaption>: <figure> wraps charts, code, and similar self-contained content, and <figcaption> provides its caption.

- <mark>: Marks or highlights text.

Other Semantic Tags

- <h1> - <h6>: Define headings at different levels. The <h1> tag is usually kept consistent with the <title> tag, which is beneficial for search engine optimization.

- <p>: Defines a paragraph.

- <strong> and <em>: Emphasize text, with <strong> indicating strong importance and <em> indicating stress emphasis.

- <cite>: Represents the title of a cited work.

- <blockquote>: Defines a block quotation, set apart from the surrounding text.
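A quick structural check can catch pages that skip these tags. The sketch below counts start tags with the standard `html.parser` module; `semantic_report`, the choice of landmarks, and the "exactly one <h1>" expectation are illustrative assumptions rather than rules from any search engine.

```python
from collections import Counter
from html.parser import HTMLParser

# Structure tags this sketch expects on a full page (an assumption).
LANDMARKS = ("header", "nav", "main", "footer")


class TagCounter(HTMLParser):
    """Count how many times each start tag appears in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        self.counts[tag] += 1


def semantic_report(html: str) -> dict:
    """Summarize heading and landmark usage for a page."""
    parser = TagCounter()
    parser.feed(html)
    parser.close()
    return {
        "h1_count": parser.counts["h1"],
        "missing_landmarks": [t for t in LANDMARKS if parser.counts[t] == 0],
    }


page = "<header><h1>Title</h1></header><main><article><h2>Part</h2></article></main>"
print(semantic_report(page))
```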

Internal and External Links

First, let’s distinguish between internal and external links on a website:

- Internal links: Links from one page on your website to another page. Internal links form a web-like structure within the website, maximizing the breadth and depth of spider crawling.

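- External links: Links from other websites to your website, which increase your site’s authority and traffic. Links from your pages out to other websites are also external, and they are the ones you control in your own markup.

When adding internal and external links, pay attention to the nofollow and external values of the rel attribute on the a tag.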

<a rel="nofollow" href="https://www.xxxx.com/">fake website</a>

Setting rel="nofollow" on a link asks search engines not to follow it or pass authority through it, which avoids diluting the page’s authority. Google treats it as a hint rather than a strict directive. The value only affects search engines and exists purely for SEO.

Use cases:

- Blocking spam links, such as external links in website comments, links left by users in forums, etc.

- When the external link content is unrelated to your site or is unstable and of poor quality, it is recommended to use nofollow.

- When the density of internal links is too high and the page importance is low, nofollow can be used.

Adding high-quality external links appropriately is beneficial for SEO and can have a positive impact. The key lies in the quality and quantity of external links.
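To review where nofollow is missing, outbound links can be listed from a page's HTML. This is a sketch under stated assumptions: `LinkAuditor` is a hypothetical class, a link counts as external when its host differs from `site_host`, and links without a host (relative URLs, `mailto:` and similar) are treated as internal.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkAuditor(HTMLParser):
    """Collect external links that do not carry rel="nofollow"."""

    def __init__(self, site_host: str) -> None:
        super().__init__()
        self.site_host = site_host
        self.followed_external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href") or ""
        host = urlparse(href).netloc
        if not host or host == self.site_host:
            return  # no host or same host: treated as internal
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "nofollow" not in rel_tokens:
            self.followed_external.append(href)


html = (
    '<a href="/about">About</a>'
    '<a rel="nofollow" href="https://www.xxxx.com/">fake website</a>'
    '<a href="https://example.org/post">reference</a>'
)
auditor = LinkAuditor("blog.crisweb.com")
auditor.feed(html)
auditor.close()
print(auditor.followed_external)
```

The output is a review list, not a verdict: a followed link to a high-quality source is often exactly what you want.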

Canonical URL

By adding a <link> tag in the <head> section of HTML, you can help search engines identify the “canonical version” of a web page, thereby avoiding duplicate content issues.

This is very effective in the following scenarios:

- Multiple URLs pointing to the same content.

- Paginated content, such as blog list pages.

As a best practice, even if a page has no duplicate content, it is still worth adding a canonical URL that points to the page itself.

<link rel="canonical" href="https://blog.crisweb.com" />

Additionally, follow these best practices:

- Ensure that the canonical URL returns an HTTP 200 status code.

- Do not define multiple canonical tags on the same page.

- Avoid pointing to circular or invalid links.

- If a page has permanently moved, prefer a 301 redirect over a canonical URL.
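The "multiple URLs, same content" case is often caused by tracking parameters and fragments, so the canonical URL can be derived from the request URL. The sketch below is one possible approach: `canonical_url`, `canonical_link_tag`, and the `TRACKING_PARAMS` list are assumptions, and a real site would decide for itself which parameters change the content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Drop tracking parameters and the fragment, and lowercase the host."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(query), "")
    )


def canonical_link_tag(url: str) -> str:
    """Render the <link rel="canonical"> tag for the given request URL."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_link_tag("https://blog.crisweb.com/posts/a/?utm_source=x&page=2#top"))
```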

Website Redirection

A redirect passes the ranking weight of the old URL to the new URL and helps search engines and users recognize that content has moved, which reduces traffic loss and preserves search rankings. Redirects are either permanent or temporary: a 301 redirect is permanent and transfers weight well, while a 302 redirect is temporary and transfers weight less reliably.

Therefore, for a temporary change (such as a promotional page), use a 302 redirect so that weight is not transferred incorrectly. Conversely, for a website overhaul, URL structure change, domain replacement, or content migration, use a permanent redirect.
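The 301-versus-302 decision can be encoded next to the redirect table itself, so each rule states whether the move is permanent. This is a framework-independent sketch: the paths in `REDIRECTS` and the `resolve_redirect` helper are hypothetical, and only the status codes come from the standard `http.HTTPStatus` enum.

```python
from http import HTTPStatus

# Hypothetical redirect table: old path -> (new path, is the move permanent?)
REDIRECTS = {
    "/old-blog/deep-copy": ("/posts/deep-copy", True),  # content migration
    "/sale": ("/promo/spring", False),  # temporary promotional page
}


def resolve_redirect(path: str):
    """Return (status_code, target) for a redirected path, else None."""
    rule = REDIRECTS.get(path)
    if rule is None:
        return None
    target, permanent = rule
    status = HTTPStatus.MOVED_PERMANENTLY if permanent else HTTPStatus.FOUND
    return int(status), target


print(resolve_redirect("/old-blog/deep-copy"))
print(resolve_redirect("/sale"))
```

A web server or framework handler would then send the returned status code together with a Location header set to the target.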

robots File

The robots.txt file controls how search engine crawlers (such as Googlebot) crawl the site, which directly affects the site’s index coverage and crawl efficiency.

Common robots Configuration Scenarios

- User-agent: The name of the search engine crawler that the following rules apply to. If the value is *, the rules apply to any crawler.

- Disallow: A URL path, directory, or the entire website that must not be accessed. URLs whose path starts with the Disallow value will not be crawled. An empty Disallow record means that every part of the website may be accessed.

Basic configuration:

User-agent: *

Disallow:

Sitemap: https://blog.crisweb.com/sitemap.xml

Blocking all crawlers:

User-agent: *

Disallow: /

Allowing specific crawlers and blocking others:

User-agent: Googlebot

Allow: / # Allow Google to crawl the entire site

User-agent: *

Disallow: / # Block other crawlers

Blocking sensitive directories or files:

User-agent: *

Disallow: /admin/ # Backend management directory

Disallow: /tmp/ # Temporary files

Disallow: /login.php # Login page

Disallow: /search? # Search pages with parameters
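Rules like these can be sanity-checked before deployment with Python's standard `urllib.robotparser`. The sketch below parses two of the configurations as strings; `load_rules` is a hypothetical helper, and the standard-library parser is only an approximation, since real crawlers such as Googlebot may interpret some rules (for example wildcards) differently.

```python
from urllib.robotparser import RobotFileParser

GOOGLEBOT_ONLY = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

BLOCK_SENSITIVE = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/
Disallow: /login.php
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


SITE = "https://blog.crisweb.com"
googlebot_only = load_rules(GOOGLEBOT_ONLY)
sensitive = load_rules(BLOCK_SENSITIVE)

print(googlebot_only.can_fetch("Googlebot", SITE + "/posts/"))
print(googlebot_only.can_fetch("OtherBot", SITE + "/posts/"))
print(sensitive.can_fetch("Googlebot", SITE + "/admin/users"))
```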

Sitemap

The entries in sitemap.xml tell search engines which pages exist and help them discover and crawl the website. A properly configured sitemap.xml makes crawling more efficient. Here are the core fields of each entry:

- loc: Required, specifies the full URL of the page.

- lastmod: Optional, marks the last modification time of the page.

- changefreq: Optional, a hint about how often the page changes. It does not determine the actual crawling frequency.

- priority: Optional, the priority of the page relative to other pages on the same site, from 0.0 to 1.0 (default 0.5). Higher values mean higher priority.
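A sitemap with these fields can be generated with the standard `xml.etree.ElementTree` module. This is a minimal sketch: `build_sitemap` is a hypothetical helper, and the page data (including the dates) is made up for illustration. The namespace URI is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[dict]) -> str:
    """Build sitemap.xml content; only loc is required for each page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        for field in ("lastmod", "changefreq", "priority"):
            if field in page:
                ET.SubElement(url, field).text = str(page[field])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and dates, for illustration only.
xml_text = build_sitemap(
    [
        {"loc": "https://blog.crisweb.com/", "lastmod": "2024-01-01", "priority": 1.0},
        {"loc": "https://blog.crisweb.com/posts/", "changefreq": "weekly"},
    ]
)
print(xml_text)
```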

For specific content types, such as multilingual, video, image, and news content, sitemap.xml has different configurations. For more details, see the search engines’ sitemap documentation.